AI Model Providers vs AI Infrastructure Providers: How the Market Is Structured

Introduction

The artificial intelligence ecosystem has evolved into a complex, multi-layered marketplace where different types of providers serve distinct roles in the value chain. Understanding the distinction between AI model providers and AI infrastructure providers is crucial for enterprise decision-makers, infrastructure engineers, and technology strategists navigating the AI landscape.

This market structure directly impacts how organizations build, deploy, and scale AI applications. The choice between leveraging pre-trained models from specialized providers and building infrastructure capabilities in-house affects everything from cost management and technical architecture to vendor relationships and competitive positioning. As AI workloads become increasingly demanding and specialized, the boundaries between these provider categories continue to shift, creating new opportunities and challenges for infrastructure teams.

The economic implications are substantial. Organizations that understand this market structure can make more informed decisions about resource allocation, avoid vendor lock-in, and build more resilient AI architectures. This understanding becomes even more critical as AI workloads scale from experimental proofs of concept to production systems handling millions of requests.

What Is the AI Provider Ecosystem?

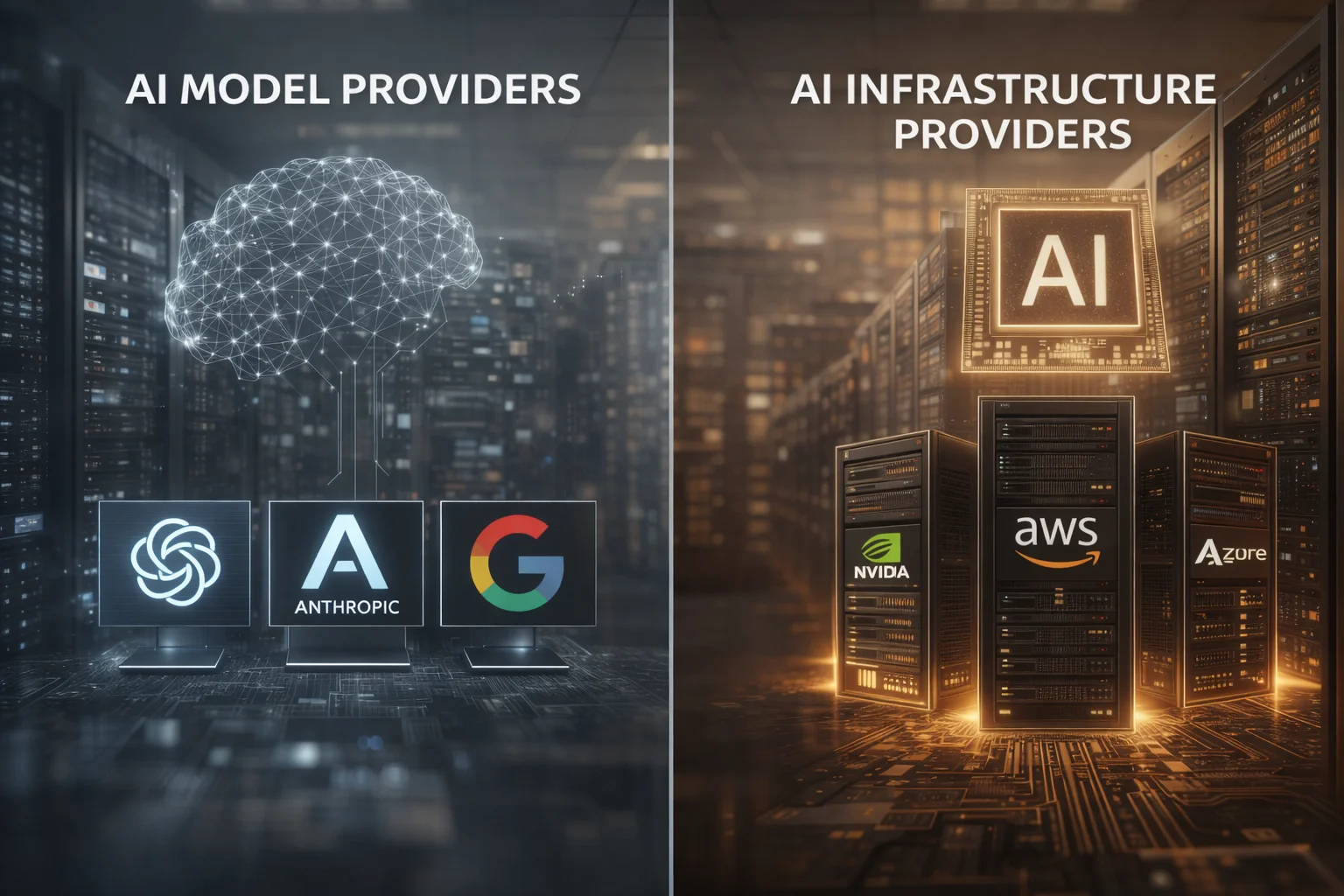

The AI provider ecosystem consists of two primary categories of companies that serve complementary but distinct functions in the AI value chain. AI model providers focus on developing, training, and serving pre-trained artificial intelligence models that can perform specific tasks like natural language processing, computer vision, or code generation. These providers handle the complex process of model development, from data collection and preprocessing to training on massive compute clusters and ongoing model optimization.

AI infrastructure providers, conversely, supply the underlying computational resources, platforms, and tools necessary to train, deploy, and operate AI models. This includes everything from raw GPU compute capacity and specialized networking to orchestration platforms and MLOps tooling. These providers focus on the operational aspects of AI rather than the intelligence itself.

The distinction mirrors traditional enterprise software patterns where application providers build on platform providers. However, AI introduces unique complexities around computational requirements, data handling, and model lifecycle management that blur these traditional boundaries. Some companies operate as hybrid providers, offering both proprietary models and the infrastructure to run them, while others maintain strict focus on one layer of the stack.

This ecosystem structure allows for specialization and efficiency. Model providers can focus on advancing the state of AI capabilities without managing massive infrastructure operations, while infrastructure providers can optimize for performance, reliability, and cost-effectiveness across diverse AI workloads. The result is a more mature market where enterprises can choose best-of-breed solutions for different aspects of their AI strategy.

How the AI Market Structure Works

The AI market operates through several interconnected layers, each serving specific functions in the overall value chain. At the foundation lies the semiconductor layer, led by companies like NVIDIA, AMD, and Intel, which design (and in some cases manufacture) the specialized chips required for AI computation. These chips power everything from training massive language models to running inference workloads in production.

Above the hardware layer, cloud infrastructure providers like Amazon Web Services, Microsoft Azure, and Google Cloud Platform offer virtualized access to AI-optimized compute resources. They provide not just raw GPU instances but also specialized services for distributed training, model serving, and data management. These providers abstract away the complexity of managing physical hardware while offering flexible, scalable access to AI compute resources.

The platform layer includes specialized AI infrastructure companies that build tools and services specifically for AI workloads. Companies like Databricks, Weights & Biases, and Hugging Face provide platforms for model development, experiment tracking, and deployment. These platforms integrate with underlying cloud resources while offering higher-level abstractions tailored for machine learning workflows.

At the application layer, AI model providers offer pre-trained models through APIs or hosted services. OpenAI's GPT models, Anthropic's Claude, and Google's Gemini (the successor to PaLM) represent this category, providing sophisticated AI capabilities without requiring customers to handle the underlying infrastructure complexity. These providers typically charge based on usage metrics like tokens processed or API calls made.
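As a rough illustration of usage-based pricing, the sketch below projects a monthly bill from average request size and daily volume. The `TokenPricing` class, the function names, and every rate and volume are assumptions made up for this example; they are not any vendor's actual prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical per-million-token prices in USD (not real vendor rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request when input and output tokens are billed separately."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


def monthly_cost(pricing, requests_per_day, avg_input_tokens, avg_output_tokens, days=30):
    """Project a monthly bill from average request size and daily volume."""
    per_request = request_cost(pricing, avg_input_tokens, avg_output_tokens)
    return per_request * requests_per_day * days


# Illustrative numbers only.
example_pricing = TokenPricing(input_per_million=2.00, output_per_million=8.00)
estimate = monthly_cost(
    example_pricing,
    requests_per_day=10_000,
    avg_input_tokens=1_500,
    avg_output_tokens=500,
)
print(f"Estimated monthly cost: ${estimate:,.2f}")
```

Separating input and output rates matters because many providers price them differently, so the same request count can produce very different bills depending on response length.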

The market structure creates natural points of competition and cooperation. Infrastructure providers compete on cost, performance, and reliability, while model providers compete on accuracy, capabilities, and ease of use. However, vertical integration is common, with many companies spanning multiple layers to capture more value and control their technology stack.

Key Components and Architecture

The AI provider ecosystem consists of several critical architectural components that work together to deliver AI capabilities to end users. Understanding these components helps infrastructure teams make informed decisions about where to invest resources and how to structure their AI architecture.

Compute Infrastructure forms the foundation of the entire ecosystem. This includes specialized hardware like GPUs, TPUs, and emerging AI accelerators, along with the networking and storage systems required to support them. High-bandwidth interconnects like InfiniBand and NVLink enable the parallel processing required for training large models, while high-performance storage systems handle the massive datasets involved in AI training and inference.

Orchestration and Management Platforms provide the control plane for AI workloads. Kubernetes-based solutions like Kubeflow and proprietary platforms like Databricks' Lakehouse Platform handle job scheduling, resource allocation, and workflow management across distributed compute clusters. These platforms abstract the complexity of managing hundreds or thousands of compute nodes while providing observability and control over AI workloads.

Data Infrastructure encompasses the systems required to collect, process, and serve the data that feeds AI models. This includes data lakes, feature stores, and streaming processing systems that can handle the scale and velocity of modern AI datasets. Vector databases and embedding systems provide specialized storage for AI-native data formats, while data lineage and governance tools ensure compliance and reproducibility.

Model Development and Training Infrastructure includes the tools and frameworks used to build AI models. This ranges from development environments and experiment tracking systems to deep learning frameworks like PyTorch and TensorFlow, both of which support distributed training. Model registries provide versioning and governance for trained models, while automated machine learning platforms help democratize model development across organizations.

Inference and Serving Infrastructure handles the deployment and operation of trained models in production environments. This includes model serving platforms that can scale to handle millions of requests, edge inference systems for low-latency applications, and specialized hardware optimized for inference workloads. Load balancing, caching, and monitoring systems ensure reliable operation at scale.

Integration and API Management provides the interfaces between different components of the AI stack. This includes API gateways that manage access to model endpoints, integration platforms that connect AI services with existing enterprise systems, and developer tools that simplify the process of building AI-powered applications.

Use Cases and Applications

The structured AI provider ecosystem enables a wide range of use cases across different industries and technical requirements. Understanding these applications helps infrastructure teams plan for the specific demands of their AI workloads and choose appropriate provider strategies.

Enterprise Knowledge Management represents a common use case where organizations leverage large language models to process and understand internal documents, customer communications, and knowledge bases. Companies typically use model providers like OpenAI or Anthropic for the core language understanding capabilities while relying on infrastructure providers for data processing, vector storage, and API management. The architecture might include cloud-based vector databases for storing document embeddings, API gateways for managing access to external model providers, and internal compute resources for preprocessing and orchestration.
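The retrieval step in such an architecture can be sketched with a toy in-memory store. This is a minimal illustration, assuming documents have already been converted to embeddings; `InMemoryVectorStore`, the document IDs, and the three-dimensional vectors are hypothetical, and a production system would use a real vector database and an embedding model rather than a linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy stand-in for a vector database: linear scan over stored embeddings."""

    def __init__(self):
        self._items = []  # list of (doc_id, embedding) pairs

    def add(self, doc_id, embedding):
        self._items.append((doc_id, list(embedding)))

    def search(self, query_embedding, top_k=2):
        """Return the top_k (doc_id, similarity) pairs, most similar first."""
        scored = [
            (doc_id, cosine_similarity(query_embedding, embedding))
            for doc_id, embedding in self._items
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.add("hr-policy", [0.9, 0.1, 0.0])
store.add("sales-playbook", [0.1, 0.9, 0.1])
store.add("security-guide", [0.0, 0.2, 0.9])

# In practice the query vector would come from the same embedding model
# that produced the document vectors.
results = store.search([0.8, 0.2, 0.1], top_k=2)
print([doc_id for doc_id, _ in results])
```

The retrieved documents would then be passed to the external language model as context, which is where the API gateway and model provider enter the picture.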

Computer Vision Applications in manufacturing, retail, and healthcare require specialized infrastructure for processing image and video data. Organizations often combine pre-trained vision models from providers like Google or Microsoft with custom infrastructure for data ingestion, preprocessing, and real-time inference. This might involve edge compute resources for low-latency processing, specialized storage systems optimized for media files, and GPU clusters for batch processing of large image datasets.

Financial Services use cases like fraud detection and risk assessment typically require both real-time inference capabilities and the ability to train custom models on proprietary data. Banks and fintech companies often maintain hybrid architectures that combine external model providers for general capabilities with internal infrastructure for training domain-specific models. The architecture emphasizes data security, model explainability, and regulatory compliance, requiring specialized tools for model governance and audit trails.

Scientific Computing applications in drug discovery, climate modeling, and materials science demand massive computational resources for training complex models. Research institutions and pharmaceutical companies often use specialized AI infrastructure providers that offer access to supercomputing-class resources, while leveraging both commercial and open-source models for different aspects of their research. The infrastructure typically includes high-performance computing clusters, specialized scientific computing frameworks, and collaboration tools for distributed research teams.

Content Creation and Media applications leverage generative AI models for producing text, images, video, and audio content. Media companies and creative agencies often use external model providers for core generation capabilities while building internal infrastructure for content management, brand safety, and workflow integration. The architecture includes content delivery networks for distributing generated media, rights management systems for intellectual property protection, and quality assurance tools for ensuring brand consistency.

Benefits and Challenges

The structured AI provider ecosystem offers significant advantages for organizations building AI capabilities, but also introduces complexity and potential risks that infrastructure teams must carefully consider.

Benefits of Specialization include access to cutting-edge capabilities without the massive investment required to develop them internally. Model providers invest billions of dollars in research and development, creating capabilities that would be prohibitively expensive for most organizations to replicate. Infrastructure providers achieve economies of scale in managing AI workloads, offering cost-effective access to specialized hardware and optimized software stacks.

Operational Efficiency improves when organizations can focus on their core competencies rather than managing the full AI stack. Companies can leverage best-of-breed solutions for different aspects of their AI architecture, potentially achieving better performance and lower costs than monolithic approaches. The ecosystem also enables faster time-to-market for AI applications, as teams can build on proven foundation models and infrastructure rather than starting from scratch.

Risk Distribution across multiple providers can improve overall system reliability and reduce single points of failure. Organizations can implement multi-provider strategies that provide redundancy and optionality, reducing the impact of individual provider outages or policy changes. This diversification also provides leverage in contract negotiations and helps avoid vendor lock-in scenarios.

Technical Challenges emerge from the complexity of integrating multiple providers and managing data flow across different systems. Organizations must handle authentication, authorization, and data governance across multiple vendor relationships. API compatibility and versioning become critical concerns, as changes in model provider APIs can break downstream applications. Performance optimization becomes more complex when orchestrating workloads across different infrastructure providers with varying characteristics.
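One common way to limit the impact of provider API changes is a thin adapter layer that maps each vendor's response format onto a single internal type. The sketch below assumes two hypothetical vendors with made-up response shapes; `Completion`, `VendorAAdapter`, `VendorBAdapter`, and the fake raw calls are illustrative names, not real SDK interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass(frozen=True)
class Completion:
    """Provider-neutral response shape used by application code."""
    text: str
    input_tokens: int
    output_tokens: int


class CompletionClient(Protocol):
    def complete(self, prompt: str) -> Completion: ...


class VendorAAdapter:
    """Wraps a hypothetical vendor whose raw response is a nested dict."""

    def __init__(self, raw_call: Callable[[str], dict]):
        self._raw_call = raw_call

    def complete(self, prompt: str) -> Completion:
        raw = self._raw_call(prompt)
        return Completion(
            text=raw["output"]["text"],
            input_tokens=raw["usage"]["prompt_tokens"],
            output_tokens=raw["usage"]["completion_tokens"],
        )


class VendorBAdapter:
    """Wraps a hypothetical vendor whose raw response is a flat tuple."""

    def __init__(self, raw_call: Callable[[str], tuple]):
        self._raw_call = raw_call

    def complete(self, prompt: str) -> Completion:
        text, tokens_in, tokens_out = self._raw_call(prompt)
        return Completion(text, tokens_in, tokens_out)


def summarize(client: CompletionClient, document: str) -> str:
    """Application code depends only on the CompletionClient interface."""
    return client.complete(f"Summarize: {document}").text


# Fake raw calls standing in for real vendor SDKs.
def fake_vendor_a(prompt):
    return {
        "output": {"text": prompt.upper()},
        "usage": {"prompt_tokens": 3, "completion_tokens": 3},
    }


def fake_vendor_b(prompt):
    return (prompt.lower(), 3, 3)


print(summarize(VendorAAdapter(fake_vendor_a), "Q3 report"))
print(summarize(VendorBAdapter(fake_vendor_b), "Q3 report"))
```

With this structure, a breaking change in one vendor's response format is absorbed by a single adapter class instead of rippling through every downstream caller.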

Economic Complexity increases as organizations must manage multiple pricing models, usage patterns, and cost optimization strategies. Model providers typically charge based on usage metrics that can be difficult to predict and budget for, while infrastructure providers offer various pricing models from on-demand to reserved capacity. Understanding the total cost of ownership across the full AI stack requires sophisticated financial modeling and continuous optimization.
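The on-demand versus reserved trade-off reduces to a break-even utilization: reserved capacity is billed for every hour whether used or not, so it only pays off above a certain level of use. The sketch below works this through with hypothetical hourly rates; the function names and the 730-hour month are assumptions for illustration.

```python
def monthly_on_demand_cost(hourly_rate, hours_used):
    """On-demand capacity is billed only for hours actually used."""
    return hourly_rate * hours_used


def monthly_reserved_cost(reserved_hourly_rate, hours_in_month=730):
    """Reserved capacity is billed for every hour, used or not."""
    return reserved_hourly_rate * hours_in_month


def break_even_utilization(on_demand_rate, reserved_rate):
    """Fraction of the month above which reserved capacity is cheaper."""
    return reserved_rate / on_demand_rate


on_demand_rate = 4.00  # hypothetical USD per GPU-hour
reserved_rate = 2.50   # hypothetical USD per GPU-hour under a commitment

threshold = break_even_utilization(on_demand_rate, reserved_rate)
print(f"Reserved is cheaper above {threshold:.1%} utilization")

for hours in (400, 500):
    on_demand = monthly_on_demand_cost(on_demand_rate, hours)
    reserved = monthly_reserved_cost(reserved_rate)
    cheaper = "on-demand" if on_demand < reserved else "reserved"
    print(f"{hours} h/month: on-demand ${on_demand:,.0f} vs reserved ${reserved:,.0f} -> {cheaper}")
```

A fuller total-cost model would add storage, networking, and model API charges, but the same break-even reasoning applies to each commitment decision.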

Vendor Management overhead grows as organizations work with multiple specialized providers, each with different service level agreements, support models, and business practices. Legal and compliance teams must manage multiple contracts, data processing agreements, and security assessments. Technical teams must maintain expertise across different provider ecosystems and stay current with rapidly evolving capabilities and best practices.

Data and Security Concerns intensify when AI workloads span multiple providers and cloud environments. Organizations must ensure consistent security policies across different systems while managing data residency requirements and regulatory compliance. Data lineage and auditability become more complex when processing spans multiple provider environments, requiring sophisticated governance frameworks and monitoring systems.

Getting Started with AI Provider Strategy

Implementing an effective AI provider strategy requires careful assessment of organizational needs, technical requirements, and long-term strategic goals. Infrastructure teams should begin by conducting a comprehensive audit of existing AI use cases and planned initiatives to understand the scope of requirements and identify patterns that might inform provider selection.

Requirements Assessment should catalog both current and anticipated AI workloads, including computational requirements, data characteristics, performance targets, and compliance constraints. Teams should evaluate whether workloads require custom model training, can leverage pre-trained models, or need hybrid approaches. Understanding data sensitivity levels, geographic requirements, and integration needs helps narrow provider options and architectural approaches.

Provider Evaluation involves establishing criteria for both model and infrastructure providers based on organizational priorities. Technical criteria include model accuracy and capabilities, API reliability and performance, supported frameworks and tools, and integration options. Business criteria encompass pricing models, contract terms, support quality, and strategic alignment. Teams should conduct proof-of-concept projects with shortlisted providers to validate technical capabilities and operational characteristics.
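One simple way to make such criteria comparable is a weighted scoring matrix. The following is a minimal sketch; the criteria, weights, scores, and provider names are all invented for illustration, and real evaluations would ground the scores in proof-of-concept results.

```python
def weighted_score(scores, weights):
    """Weighted average of criterion scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    return sum(scores[criterion] * w for criterion, w in weights.items()) / total_weight


# Hypothetical priorities: higher weight means the criterion matters more.
weights = {"accuracy": 4, "reliability": 3, "pricing": 2, "support": 1}

# Hypothetical 1-10 scores gathered during evaluation.
candidates = {
    "provider_a": {"accuracy": 9, "reliability": 7, "pricing": 5, "support": 6},
    "provider_b": {"accuracy": 7, "reliability": 9, "pricing": 8, "support": 7},
}

ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name], weights):.2f}")
```

Agreeing on the weights before scoring any provider helps keep the exercise from being tuned toward a preferred vendor after the fact.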

Architecture Planning requires designing systems that can effectively leverage multiple providers while maintaining operational efficiency and cost control. This includes establishing data flow patterns, API management strategies, and monitoring approaches that work across provider boundaries. Teams should plan for provider redundancy and failover scenarios while ensuring consistent security and governance policies across the entire AI stack.
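A basic failover path across providers can be sketched as an ordered list of clients tried in turn. This is a simplified illustration under stated assumptions: `ProviderError`, `complete_with_failover`, and the two stand-in provider functions are hypothetical, and a production version would typically add timeouts, retries with backoff, and health checks.

```python
class ProviderError(Exception):
    """Raised by a provider client when a request fails."""


def complete_with_failover(prompt, providers):
    """Try each (name, call) pair in order; return the first successful response."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for real provider SDK calls.
def primary_provider(prompt):
    raise ProviderError("simulated outage")


def secondary_provider(prompt):
    return f"echo: {prompt}"


used, response = complete_with_failover(
    "Summarize the Q3 report",
    [("primary", primary_provider), ("secondary", secondary_provider)],
)
print(used, "->", response)
```

Returning the name of the provider that served the request makes it easier to track how often the fallback path is exercised.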

Implementation Strategy should follow a phased approach that begins with lower-risk use cases and gradually expands to more critical applications. Starting with external model providers for non-sensitive use cases allows teams to gain experience with API integration and cost management before investing in infrastructure provider relationships. Pilot projects help validate architectural assumptions and operational procedures before scaling to production workloads.

Operational Excellence frameworks should address monitoring, cost optimization, and performance management across multiple provider relationships. Teams need dashboards that provide visibility into usage patterns, costs, and performance metrics across different providers. Automated cost optimization tools can help manage expenses across multiple pricing models, while performance monitoring ensures service level objectives are met consistently.
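The kind of cross-provider visibility described here can start as a simple aggregation over request logs. The sketch below assumes each log record carries a provider name, cost, latency, and success flag; the record fields, provider labels, and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def summarize_usage(records):
    """Group request records by provider and compute simple dashboard metrics."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record["provider"]].append(record)

    summary = {}
    for provider, rows in grouped.items():
        summary[provider] = {
            "requests": len(rows),
            "total_cost_usd": round(sum(r["cost_usd"] for r in rows), 4),
            "avg_latency_ms": mean(r["latency_ms"] for r in rows),
            "error_rate": sum(1 for r in rows if not r["ok"]) / len(rows),
        }
    return summary


# Hypothetical log records from two providers.
records = [
    {"provider": "model_api", "cost_usd": 0.012, "latency_ms": 120, "ok": True},
    {"provider": "model_api", "cost_usd": 0.008, "latency_ms": 180, "ok": False},
    {"provider": "gpu_cloud", "cost_usd": 0.500, "latency_ms": 40, "ok": True},
]

for provider, metrics in summarize_usage(records).items():
    print(provider, metrics)
```

Normalizing records into one schema at ingestion time is what makes this aggregation possible, since each provider reports usage in its own format.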

Risk Management strategies should address potential provider dependencies, service disruptions, and cost volatility. Teams should maintain provider diversification where practical and develop contingency plans for critical workloads. Regular business continuity testing helps ensure fallback procedures work effectively, while ongoing market analysis identifies new provider options and capabilities that might improve the overall architecture.

Key Takeaways

• The AI ecosystem operates through distinct layers, with model providers focusing on AI capabilities and infrastructure providers supplying computational resources and operational tools

• Specialization creates value by allowing organizations to access cutting-edge AI capabilities without massive internal investment in research and infrastructure

• Hybrid strategies often work best, combining external model providers for general capabilities with internal or specialized infrastructure for custom requirements and sensitive data

• Technical integration complexity increases with multiple providers, requiring sophisticated API management, data governance, and orchestration capabilities

• Cost management becomes multifaceted, involving different pricing models, usage patterns, and optimization strategies across model and infrastructure providers

• Risk mitigation requires diversification across providers and development of comprehensive business continuity plans for critical AI workloads

• Market structure continues evolving, with increasing vertical integration and new specialized providers entering different layers of the stack

• Infrastructure teams need new skills in vendor management, multi-cloud orchestration, and AI-specific operational practices to succeed in this ecosystem

• Data governance and security require consistent policies and monitoring across multiple provider relationships and cloud environments

• Success depends on strategic alignment between AI provider choices and long-term business objectives, technical capabilities, and risk tolerance